Firebase/GCP: save money by cleaning up your artifacts

Photo by Emil Kalibradov

I'm working on a personal project and I'm using the awesome Google Cloud Platform. There are so many things that you can do with it, and all the tools that you may need are probably there. This, of course, comes with a price. In this article I'll share a tip on how to reduce your spending there.

Firebase functions

When I started the project I decided to use Firebase. That's a set of products by Google that rely on GCP infrastructure and are specifically targeted at app development. It's interesting that most of the Firebase tools are actually fine-tuned GCP features. For example, files uploaded to Firebase Storage are visible in the Cloud Storage section. Firebase functions are actually Cloud Functions, and as such they share the same deployment mechanism: they are built as containers. This means that we are using Cloud Storage too, because those containers need to be stored somewhere. So, it's all connected.

The problem

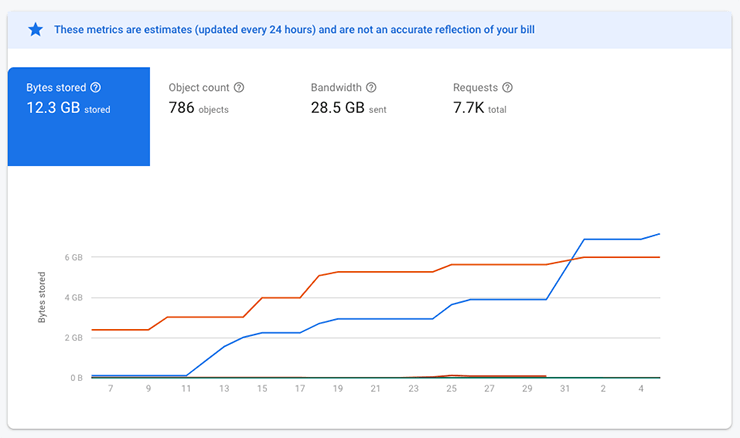

I was browsing my console when I noticed something weird: my bill for Firestore was a few cents. That surprised me because I thought there was a free quota and I'm not doing anything crazy. On top of that, I'm the only user of my project. What will happen when there is real usage?

The graph shows that several Cloud Storage buckets are taking up a few GBs. Those were artifacts.<project name>.appspot.com and eu.artifacts.<project name>.appspot.com. I started digging to understand what they were and found that they hold the deployments of my Firebase functions. Every time I deploy I get new artifacts, and I have to clean them up manually. There is even a GitHub issue for that, and on Stack Overflow I found a couple of similar cases. So, obviously that's a problem. I can't rely on manual cleanup. I also plan to use Cloud Build for some long-running tasks, and this will create even more artifacts.

A quick fix that didn't work

Every Cloud Storage bucket has lifecycle rules. Here's what those are:

Lifecycle rules let you apply actions to a bucket's objects when certain conditions are met — for example, switching objects to colder storage classes when they reach or pass a certain age.

In a couple of places people recommend setting a rule to delete items in those buckets, for example "delete all the artifacts if they are 2 days old". This does free up the space, but deleting artifacts this way means broken builds later: a deployment may reference a layer that is no longer in the bucket. I hit exactly that wall, so this wasn't an option.
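
To make the idea concrete, here is a rough sketch of what such a rule looks like. It builds the lifecycle configuration as a plain object and prints it as JSON. The age of 2 mirrors the "2 days old" example, and the file name mentioned in the comment is only a placeholder. This is the approach that broke my deployments, so treat it as an illustration and not as a recommendation.

```javascript
// Lifecycle configuration in the JSON shape that Cloud Storage expects.
// "age" is counted in days since the object was created.
const lifecycleConfig = {
  rule: [
    {
      action: { type: "Delete" },
      condition: { age: 2 }
    }
  ]
};

// Save the output to a file (lifecycle.json is a placeholder name) and apply it with:
//   gsutil lifecycle set lifecycle.json gs://<bucket name>
const lifecycleJson = JSON.stringify(lifecycleConfig, null, 2);
console.log(lifecycleJson);
```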

The solution

GCP has a gazillion APIs and instruments. One of them is the gcloud CLI. It gives us access to (I guess) everything on the platform. I saw a small script that goes over the images in a registry and deletes the old containers. This looked promising, so I decided to give it a go and wrote my own version in Node.js. In essence, the code does the following:

- Loops over the container registries

- Finds all the container images in each registry

- Fetches the metadata about tags and digests for each container image

- Ignores the ones with the tag latest

- Keeps the 2 most recent container images and deletes everything else (the number 2 is configurable)

The script assumes that it's running in an environment where gcloud is set up and configured. It looks like this:

    const KEEP_AT_LEAST = 2;
    const CONTAINER_REGISTRIES = [
      "gcr.io/<project name here>",
      "eu.gcr.io/<project name here>/gcf/europe-west3"
    ];

    async function go(registry) {
      const images = await command(`gcloud`, ["container", "images", "list", `--repository=${registry}`, "--format=json"]);
      for (let i = 0; i < images.length; i++) {
        const image = images[i].name;
        let tags = await command(`gcloud`, ["container", "images", "list-tags", image, "--format=json"]);
        const totalImages = tags.length;
        // do not touch `latest`
        tags = tags.filter(({ tags }) => !tags.find((tag) => tag === "latest"));
        // sorting by date
        tags.sort((a, b) => {
          const d1 = new Date(a.timestamp.datetime);
          const d2 = new Date(b.timestamp.datetime);
          return d2.getTime() - d1.getTime();
        });
        // keeping at least X number of images
        tags = tags.filter((_, i) => i >= KEEP_AT_LEAST);
        for (let j = 0; j < tags.length; j++) {
          await command("gcloud", ["container", "images", "delete", `${image}@${tags[j].digest}`, "--format=json", "--quiet", "--force-delete-tags"]);
        }
      }
    }

    (async function () {
      for (let i = 0; i < CONTAINER_REGISTRIES.length; i++) {
        await go(CONTAINER_REGISTRIES[i]);
      }
    })();

The command function spawns a gcloud process and resolves with its output, parsed as JSON when possible.

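    const { spawn } = require("child_process");

    function command(cmd, args) {
      return new Promise((done, reject) => {
        const ps = spawn(cmd, args);
        let result = "";
        let errors = "";
        ps.stdout.on("data", (data) => (result += data));
        // gcloud writes warnings and progress to stderr; keeping it apart
        // from stdout means the JSON output stays parseable
        ps.stderr.on("data", (data) => (errors += data));
        // the process could not be started at all (e.g. gcloud is not installed)
        ps.on("error", reject);
        ps.on("close", (code) => {
          if (code !== 0) {
            console.log(`process exited with code ${code}: ${errors}`);
          }
          try {
            done(JSON.parse(result));
          } catch (err) {
            // the output is not JSON, so return the raw text
            done(result);
          }
        });
      });
    }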
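
If you want to check what the script would remove before letting it delete anything, the selection logic can be pulled out into a pure function and run against sample data. The sketch below does that. The function name and the sample entries are made up for illustration, and the entries only mimic the fields the script reads from the list-tags output (digest, tags and timestamp.datetime), with ISO dates for simplicity.

```javascript
// Hypothetical sample data with the same fields the script reads from
// `gcloud container images list-tags ... --format=json`.
const sampleEntries = [
  { digest: "sha256:aaa", tags: [], timestamp: { datetime: "2021-03-01T10:00:00Z" } },
  { digest: "sha256:bbb", tags: ["latest"], timestamp: { datetime: "2021-03-05T10:00:00Z" } },
  { digest: "sha256:ccc", tags: [], timestamp: { datetime: "2021-03-04T10:00:00Z" } },
  { digest: "sha256:ddd", tags: [], timestamp: { datetime: "2021-03-03T10:00:00Z" } },
  { digest: "sha256:eee", tags: [], timestamp: { datetime: "2021-03-02T10:00:00Z" } }
];

// Returns the digests that the cleanup would delete, without calling gcloud.
function selectDigestsToDelete(entries, keepAtLeast) {
  return entries
    // never touch anything tagged `latest`
    .filter((entry) => !(entry.tags || []).includes("latest"))
    // newest first
    .sort((a, b) => new Date(b.timestamp.datetime) - new Date(a.timestamp.datetime))
    // skip the newest `keepAtLeast` entries, everything after them goes
    .slice(keepAtLeast)
    .map((entry) => entry.digest);
}

const toDelete = selectDigestsToDelete(sampleEntries, 2);
console.log(toDelete); // [ 'sha256:eee', 'sha256:aaa' ]
```

With 2 images kept, the two newest untagged images (ccc and ddd) and the one tagged latest survive, and only the two oldest are listed for deletion.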

I've created a gist here so you can copy the full version easily.

If you prefer to write a bash script, here are the commands:

    # finding images
    gcloud container images list --repository=<your repository>

    # getting metadata
    gcloud container images list-tags <image name>

    # deleting images
    gcloud container images delete <image name>@<digest> --quiet --force-delete-tags

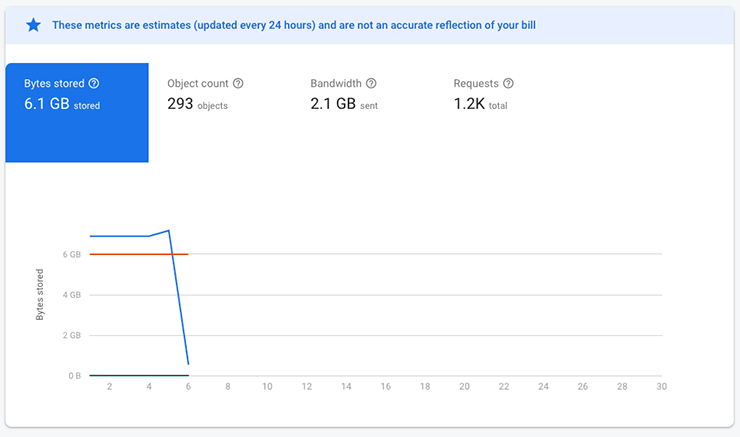

Why this works

As mentioned above, deleting files straight from the Cloud Storage bucket is not a good idea, because we would have to know exactly which artifacts are safe to remove. Deleting images instead cleans up all the artifacts connected with them. At least that's how I understand it, and that's the effect on my project.

And with that I'm happy to proceed. As part of my deployment workflow I'm running this script and I make sure that my storage usage is low. Which means less money spent. Which means more budget for cool stuff. 😎